Introduction:

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Athena is easy to use. Simply point to your data in Amazon S3, define the schema, and start querying using standard SQL. Most results are delivered within seconds. With Athena, there’s no need for complex ETL jobs to prepare your data for analysis.

About Athena Features:

- Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run.

- With a few actions in the AWS Management Console, you can point Athena at your data stored in Amazon S3 and begin using standard SQL to run ad-hoc queries and get results in seconds.

- When you run the query in Athena, that amount of data the query has to scanned in order to give you the results that drives the cost.

- In general, if your data has got larger storage size, it indirectly impacts amount of data scan and you have to pay higher cost.

- Athena query editor itself used for data exploration. Athena APIs is used to query data using APIs.

Athena charged for the number of bytes scanned by Amazon Athena, rounded up to the nearest megabyte, with a 10MB minimum per query. Thus, the aim is to run the query with least amount of data scanned.

We will run the same query before and after we do the optimization to see how these recommendations significantly reduce the amount of data scanned, and thus, reducing cost and improving performance.

There are 3 technics used for optimized data scanned:

- Limit row level scanning using data partition method.

- Limit column level scanning using parquet format.

- Limit row level scanning using data bucketing method.

Data partitioning:

- Partition the data, which relates to how the data files are organized in the storage system for a data lake.

- Data partitioning splits the data from a table to be grouped together in different folders, based on one or more of the columns in the dataset.

- Data is physically partitioned based on a field.

- Partition field should be defined based on key filter criteria.

- Partition fields can be hierarchical.

Parquet Format:

- Parquet files are columnar-based, meaning that the contents of the file are physically stored to have data grouped by columns, rather than grouped by rows as with most file formats.

- Parquet files also contain metadata about the data they store.

- Compressed format saves storage.

- Column wise storage limits column level scan.

- These performance improvements and cost savings, a very common transformation is to convert incoming files from their original format (such as CSV, JSON, XML, and so on) into the analytics-optimized Parquet format.

Hands-on:

- Sign in to the AWS Management Console and open the Amazon S3 console.

- After that we created ‘prisoft-trio-bucket’ , in this bucket created a folder ‘blogdata’ and Uploaded a yellow_tripdata_2020-06.csv file and it contains 47.9 MB . See the screenshot below.

- Open the AWS Management Console for Athena.

- In this query editor, we created a table ‘yellow_pp’ and store it in the ‘s3://prisoft-trio-bucket/blogdata/’ location, by using below query:

CREATE EXTERNAL TABLE IF NOT EXISTS `prisoft_trio_db`.`yellow_pp` (

`vendorid` int,

`tpep_pickup_datetime` timestamp,

`tpep_dropoff_datetime` timestamp,

`passenger_count` int,

`trip_distance` int,

`ratecodeid` int,

`pulocationid` int,

`dolocationid` int,

`payment_type` int,

`fare_amount` int,

`extra` int,

`mta_tax` int,

`tip_amount` int,

`tolls_amount` int,

`improvement_surcharge` int,

`total_amount` int,

`congestion_surcharge` int

)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES ( 'serialization.format' = ',', 'field.delim' = ','

)

LOCATION 's3://prisoft-trio-bucket/blogdata/'

TBLPROPERTIES ('has_encrypted_data'='false',"skip.header.line.count"="1");- To Check the amount of Data scanned, run the query: –

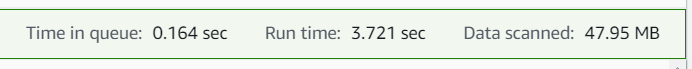

SELECT * FROM "prisoft_trio_db"."yellow_pp";- When the query executes, the below screenshot will show the amount of data Scanned and the runtime. We will compare different optimization methods based on these parameters.

- To compare the difference and benefit of partitioning, we can restrict the amount of data scanned by each query, thus improving performance and reducing cost.

- Execute the partitioning query to create a partitioned table by year and month and day.

CREATE TABLE yellow_pp_partitioned

WITH (

format = 'PARQUET',

parquet_compression = 'SNAPPY',

external_location = 's3://prisoft-trio-bucket/blogdata_partition/',

partitioned_by = ARRAY['year','month','day']

)

AS

SELECT vendorid, tpep_pickup_datetime, tpep_dropoff_datetime, passenger_count, trip_distance,

ratecodeid, pulocationid, dolocationid, payment_type, fare_amount, extra, mta_tax, tip_amount,

tolls_amount, improvement_surcharge, total_amount, congestion_surcharge,

Year(tpep_pickup_datetime) as "year",month(tpep_pickup_datetime) as "month",day(tpep_pickup_datetime) as "day"

FROM "prisoft_trio_db"."yellow_pp";- In the above screenshot we had to extract the year and month from a string column.

- Let’s run the same query on the optimized data to compare the difference: –

- Without partitioning query will take 2.629 sec and data scanned whole file that is 47.95 MB.

- And by using data partitioning, query will take 2.405 sec and data scanned is 1.71 MB.

Bucketing Data:

Bucketing is a concept that is related to partitioning. However, with bucketing, you group rows of data together in a specified number of buckets, based on the hash value of a column (or columns) that you specify.

Hands-on:

- On Aws console ‘prisoft-trio-bucket’ bucket, in this bucket create a folder ‘Bucketing_blog’.

- In Athena query editor, we created a table ‘bucketing_pp ‘and store it in the ‘s3://prisoft-trio-bucket/Bucketing_blog/’ location, by using below query: –

CREATE TABLE bucketing_pp

WITH (

format = 'PARQUET',

external_location = 's3://prisoft-trio-bucket/bucketingblog/',

bucketed_by = ARRAY['vendorid'],

bucket_count = 3

)

AS

SELECT vendorid, tpep_pickup_datetime, tpep_dropoff_datetime, passenger_count, trip_distance,

ratecodeid, pulocationid, dolocationid, payment_type, fare_amount, extra, mta_tax, tip_amount,

tolls_amount, improvement_surcharge, total_amount, congestion_surcharge,

Year(tpep_pickup_datetime) as "year",month(tpep_pickup_datetime) as "month",day(tpep_pickup_datetime) as "day"

FROM "prisoft_trio_db"."yellow_pp";- To compare the difference and benefit of partitioning and bucketing used blow query: –

By using partitioning method, data scanned takes 153.93 KB and 1.383 sec.

Below screenshot will show the bucketing data, 5.56 KB data scanned and 1.174 sec run time.

Conclusion:

By partitioning our data, we will significantly reduce the amount of data scanned, and thus, reducing Athena costs and improving performance.

Bucketing distributes the data to different files within a partition. So, if we want to filter a partition, Athena will only scan the relevant bucket files not the whole partition.

File optimization, Data Partitioning, Data Bucketing brings significant performance improvements and cost optimization to query processing in Amazon Athena.